Relational Joints Framework

Mapping how we engage with (game) design

by Simon Hoffiz / 2021-0914

Introduction

Thanks to technology and the development of culture, gaming is more present and celebrated than ever: there are thousands of board games to choose from and a seemingly endless stream of video games released every year across a multitude of platforms, including PC, consoles, phones, and headsets. We also have game streams, e-sports, and even gaming celebrities. The presence of games in our culture is so strong that it is easy to contemplate only superficially what a game is, how to make one, or why we play. However, as the academic fields of psychology, design, and media studies can attest, questions like why we play, how games affect us, and what place and potential games have as a medium are neither easy to answer nor trivial to consider. If we also look at the gaming industry (from AAA studios to indie developers), we quickly see not just a market reflecting the interest in games, but also the enormous effort needed to create them and keep exploring their potential. Games, and play in general, are no longer considered an activity for the unfocused and undetermined; they are now recognized as a fundamental part of human development and experience: we NEED to play, we ARE MADE to play. As interest in games grows, so do their scope and variety, revealing the complexity and nuance required to make a good game. Game design is now recognized as a design field in its own right, one that has to consider goals, timetables, and budgets like any other industry, but also human psychology, technology, and aesthetics to achieve a good design.

Now that game design is its own discipline of study, discussed across books, videos, and conferences, I recently came to the realization that the tools available to think systematically about, and discuss, the entire design of a game at once are limited. For example, we are usually good at analyzing very specific design instances of a game in isolation, but we are less successful at talking systematically about the design of a game as a whole before being overwhelmed by the sheer number of components and complexities it entails. The lack of a good approach to conceptually organize the whole design within a system of documentation limits our discussions of the design, constrains our communication with other designers, and therefore limits our understanding and development of the design itself.

That is where I come in. Hi! My name is Simon Hoffiz, and I am an architect with a strong interest in game design. In this article I offer a solution to this problem by introducing the Relational Joints Framework, a method that aims to serve as a tool for designers to identify, organize, and analyze specific interfaces (“joints”) between directly “related” elements of a design, interfaces that the user has to navigate successfully to operate the design effectively. The use and potential of the Relational Joints Framework extend beyond mere categorization or mapping of the design skeleton for a more holistic understanding of the game: it serves as the foundation for modeling cognitive requirements at specific parts of the game experience. The framework also helps designers visualize which design elements the player is engaging with, and at what moment. Additionally, it provides new terminology for a more accurate description of the design, an objective system to compare designs against each other, visual means to model the cognitive performance of the design, and derivative tools to balance the cognitive requirements of a design in development. As you will see, this model can be applied beyond game design to any other field of design, from architecture to user experience and product design.

In Part 01 of this article, we will explore the basic concepts that serve as the foundation of the Relational Joints Framework. Part 02 shows how the framework uses the concepts from Part 01 to break down the design experience into its basic design units or interfaces (“joints”). Part 02 also explains how these joints are organized to determine the emergent dynamics within the design (i.e., interactions and relations between joints), helping the designer understand how a user navigates and inhabits a design. Finally, in Part 03, I provide practical examples that show how the Relational Joints Framework can be applied as a design tool and an analysis apparatus. Without further ado, let’s dive into it!

Part 01: Intro to the Fundamentals

Affordances & Signifiers

Isn’t it great when you know what an object does or what you can do with it without reading the instruction manual? This is a key feature of a good design, so much so that the phenomenon has been studied in psychology and design theory and can be generally summarized in two terms: affordances and signifiers.

Affordances are all the emergent actions that become possible from the relationship between a particular agent (e.g., a human, animal, or robot) and an object or environment. Let’s say I present to you a chair and a basketball and ask you to list all the possible ways we could use each object. We can say that a chair (the object) affords sitting (by the agent, the human), being used as a table, a coat hanger, a door stopper, a short ladder, etc. The basketball, on the other hand, affords throwing, bouncing, passing, sitting on, etc. All of the unique possible actions between the agent and these objects are considered affordances. It is important to note that the emergent affordances vary according to the agent that interacts with the object. For example, a doorknob affords operation by a human but not by a dog, because a dog lacks hands. In contrast, a doggy door affords passage to a dog but not to a human because of the size difference.

The term “affordances” was coined by James J. Gibson and is best defined in his book “The Ecological Approach to Visual Perception” for the psychology field; it was then carried into the design field by Don Norman. In his book “The Design of Everyday Things”, Norman further developed the idea of affordances to include the concept of signifiers, stating that “form implies function”. Affordances are not determined by the features of an object alone, but those features can point to the specific way the object was designed to be used, and these “pointers” are called signifiers. Signifiers are characteristics of an object that stand out and guide the user to interact with the design the way the designer intended. Signifiers are generated through the design’s physical characteristics and constraints, typologies, the user’s culture, and individual experiences that inform how to interpret the human-object interaction. Signifiers are the reason why, when thinking of the ways a chair and a basketball can be used, you most likely first thought of “sitting” on the chair and “throwing” the basketball, even though you can also throw a chair, sit on the basketball, or do dozens of other things with them.

Example of Affordances & Signifiers

Let’s use a door system as an example to explore how affordances and signifiers are found and interacted with in a design. First, let’s look at the affordances of a door system. A door system affords: (1) the manipulation of the knob, (2) the engagement of its locking system, (3) panel rotation on axis (hinges), and (4) passage through. What if I presented you with a door like the one featured in the image below and asked you to explain how to operate it by just looking at it?

You are not able to describe much about how the door functions because the door itself, its design, has no obvious features that communicate its functions; it’s just a plain surface with seams, devoid of signifiers. The most you can deduce from this design is that the door is located at that specific spot on the wall, and its overall panel dimensions. While this door might look really nice, it could be very frustrating to interact with on a daily basis. Notice in particular how the absence of a knob or handle deprives us of understanding how the door operates. A simple doorknob communicates vital information, including whether the door panel is meant to swing or slide, and in which direction. Just take a look at the various doorknob and handle designs in the image below: from each one, we can quickly understand a distinct way in which a door is to be operated.

For example, when we encounter a vertical grab bar on a door, we normally interpret that physical feature to mean “pull the door”. Similarly, a flat metal plate on a door normally signals us to place our hands there and push (see image below). These ingrained psychological leanings that help us interpret and navigate the world around us are why and how signifiers work: signifiers are the design features that guide the user to operate the design correctly, and thus the designer’s tools for guiding the user.

We encounter affordances and signifiers every day because they are essential to every object’s design. A mug tells us where to grab it when its contents are hot. A computer mouse is designed to comfortably receive the hand on top and to be dragged across a horizontal surface. Similarly, the correct way of holding a game controller is evident: it fits the hands in a specific position so that all the buttons are comfortably within reach.

Affordances & Signifiers in the Digital Space

Affordances and signifiers are not only used in the realm of physical objects, but also digitally. I will use the ubiquitous hyperlink as our main example of affordances and signifiers in the digital space. The affordances of the hyperlink are: (1) it can be clicked on, and (2) it gives access to information, for example by opening a new tab or pop-up window. Its signifiers are: (1) its underlined text, (2) its contrasting font color and/or italics, and (3) the way the cursor changes when hovering over it (see image below). These can be seen as the basic principles of User Interface (UI) design, informing users what they can do through the emergent graphical language. Although video games use these types of affordances and signifiers in instances like save screens, a game as a whole relies on many more types of affordances and signifiers, because it facilitates a different, more complex design experience.

Given the multitude of game typologies, which can be grouped and categorized in a myriad of ways, I would like to clarify that from here on, whenever I use the term “game”, I will be referring to avatar-mediated games, whether set in 2D or 3D worlds, unless otherwise noted. This distinction matters for the purpose of this article, as some types of games, like Candy Crush, and some game instances, like menu screens, do not rely on an avatar to operate the game space; instead, they are navigated largely through the affordances and signifiers of the UI design (i.e., they normally require touching the screen at a specific location) (see image below).

Affordances & Signifiers in the Game Space

Let’s dissect how affordances and signifiers apply to a game by taking a portion of a level from Super Mario Bros. as our introductory case study. The famous “? Block” in this level has an affordance and a signifier. The affordance of this block is to give the player a reward when it is hit from below. The signifier is the “?” mark on the block, which captures the player’s curiosity and prompts an interaction (see image below).

We can see the same basic structure of affordances and signifiers in diegetic elements (elements whose source is within the game space) and non-diegetic elements (elements whose source is not part of the game space) in other games. Let’s take the latest God of War game as an example. The first image (see below) shows a UI icon (a non-diegetic element) over a chest that signifies to the player that the chest can be opened. The second image shows how runes on a ledge (a diegetic element) signify to the player that this particular portion of the ledge affords climbing.

Furthermore, there is something very interesting about how the designers of franchises like Assassin’s Creed apply this signifier-affordance structure. In Assassin’s Creed games, the designers expect players to approach the game with a basic understanding of how the real world works in order to operate and successfully navigate it. For example, if you see a ledge on a building in real life, you draw on experience to reason that if you can grab that ledge and are fit enough to pull yourself over it, you can climb the building. The signifiers the designers use in-game are the same signifiers we encounter and interpret to navigate the real world.

Cognition

Our interactions with something designed inherently involve the examination and interpretation of signifiers, which help us understand the design’s affordances, which in turn allow us to successfully operate the design and achieve our goals with it. The examination and interpretation of signifiers requires cognition. Cognition is the mental process of acquiring knowledge and comprehension through thought, experience, and the senses; cognitive processes include reasoning, knowing, remembering, judging, and problem-solving. The “amount” of cognitive processing a person needs to execute a task successfully is known as cognitive load. It depends on questions such as: How much information needs to be taken in and remembered? How much attention has to be paid? How much planning or imagination is needed to solve the problem? When we successfully operate a designed object, we are effectively interpreting its signifiers and operating its affordances, each step requiring a different combination of cognitive load and processes. I will refer to this combination of cognitive loads and processes as cognitive resources. When this successful, sequential use of cognitive resources to operate an object happens, I like to think of it as a successful “cognitive flow”.

We can conceptualize the development of cognitive flow for a particular design’s operations along two axes: 1) the amount of cognitive resources being engaged, and 2) the number of interactions with the design in question. In the very first interactions with a design, the brain allocates a large amount of cognitive resources trying to figure out, as fast as it can, how to interact with and operate the design efficiently. This over-commitment of cognitive resources results in the overwhelming focus a person feels when trying to comprehend and execute basic interactions with a new design. Think about learning guitar for the first time, or learning how to properly hold a new game controller. The more someone interacts with the design, the more comfortable they get with it; what initially required a lot of cognitive effort becomes progressively easier to handle. Each interaction gives the brain another opportunity to refine its use of the specific cognitive resources needed to engage with the design, resulting in a declining, ever more fine-tuned cognitive flow that eventually plateaus. This plateau is the point where the brain has, for the most part, figured out how much and what type of cognitive resources it needs to deploy when operating the design. I refer to this gradual decline in the cognitive resources needed to engage with a design as cognitive contraction (see image below).
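To make the shape of this curve concrete, here is a minimal sketch in Python. It assumes, purely for illustration, that the load decays exponentially toward a plateau; the article only describes a declining curve that levels off, so the function name `cognitive_load` and the parameters `initial`, `plateau`, and `rate` are hypothetical, and the units are arbitrary.

```python
import math


def cognitive_load(interaction: int, initial: float = 100.0,
                   plateau: float = 20.0, rate: float = 0.5) -> float:
    """Hypothetical cognitive contraction curve (arbitrary units).

    `interaction` counts encounters with the design, starting at 1. The load
    starts at `initial` and decays exponentially toward `plateau`; the
    exponential shape is an illustrative assumption, not a measured law.
    """
    if interaction < 1:
        raise ValueError("interaction numbers start at 1")
    return plateau + (initial - plateau) * math.exp(-rate * (interaction - 1))


if __name__ == "__main__":
    # Print the curve for a few interaction counts to show it levelling off.
    for n in (1, 2, 5, 10, 20):
        print(n, round(cognitive_load(n), 1))
```

Raising `rate` makes the design "easier to learn" (faster contraction), while raising `plateau` models a design that stays demanding even after mastery.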

We all know how this feels! It’s the progressive mastery of skateboarding that ends with finally landing that kickflip, the ease that wasn’t quite there when playing guitar scales the day before, or the moment you understand a game level so well that you clear it in record time. It’s the feeling of progression, comprehension, and mastery. This matters because humans have a limited capacity for how much information they can process at any given time (estimated at roughly 110 bits per second). Cognitive contraction lets us fine-tune the cognitive flow to manage this limitation efficiently. Take playing musical instruments, for example. Some people can barely play a rudimentary, one-finger arrangement of “Twinkle, Twinkle, Little Star”; when they are starting out, it takes all their focus just to play the right sequence of notes at tempo. Others can masterfully play not just one instrument, but several at the same time!

Mastering a skill or understanding how to successfully operate a design reflects that the brain has learned which specific cognitive resources it needs to deploy (not more and not less) whenever it engages with the design again, and that the user has formed expectations of how to interact with the design using exactly those resources. However, when the experience becomes frustrating, doubtful, and disorienting, it’s a sign that the user is moving away from a successful cognitive flow. This usually happens when the design’s signifiers are no longer clearly guiding the user: something is not working as expected, and the user does not know what to do next. The brain then focuses all its cognitive resources on figuring out this malfunction, disrupting the previously established cognitive flow with a sudden surge of cognition aimed at resolving the issue as fast as possible. I call this disruption of the cadence of cognitive flow cognitive expansion (see image below). The expansion usually surges up and back down within an instant, resolved as soon as the user recognizes the misconception. However, if the expansion is not resolved immediately, the user starts to get frustrated; some may give up on the design within minutes and never come back to it, while others take up the challenge and figure out their misconception to restore their cognitive flow. Either way, if a user has to endure that much friction to understand how to operate a design, we can safely say that its signifiers are poorly designed.

For example, we have all encountered the public glass door that has the same type of handle on both sides. Imagine you are walking towards one of these doors while speaking with your friend beside you and texting on your phone at the same time (remember that these are actions for which you have fine-tuned your cognitive flow through years of interaction and repetition; you are a master at each of them). You reach for the door handle and pull, but the door is stuck (see image below). Your brain instantly narrows its focus to the door. You forget about your friend, the phone, and most of the world around you; all you see is the door. You immediately ask yourself: “Is it locked?” “Is it broken?” You start giggling with embarrassment, as the only thing your brain is focusing on is figuring out why the door is not working as you expected. Your long-practiced, finely tuned cognitive flow, which reflected your mastery of opening doors through ease and comfort, has been disrupted. Then, intuitively, in a desperate attempt, you push the door, and it swings open. You are relieved that you do know how to open a door after all, and you have publicly redeemed yourself. Even though this episode was brief, almost instantaneous, the cognitive expansion you went through was palpable enough to be memorable. That moment when your brain felt like it was glitching and could only think of the door until you figured out how to open it: that feeling is the result of what I call cognitive expansion.

Flow State

Maintaining a consistent and well-developed cognitive flow is important, as any disruption can turn an experience with a design in the wrong direction. According to the theory of the flow state, there are two main ways in which an experience can be thrown off track: anxiety or boredom. The idea of the “flow state” was developed in the psychology field by Mihaly Csikszentmihalyi and popularized in his book “Flow: The Psychology of Optimal Experience”, in which he describes the flow state as the optimal state of mind when performing a task. The task could be anything: playing sports, knitting, gardening, hunting, doing the dishes, playing a game, etc. When users achieve the flow state, they generally have high levels of focus on the task, lose track of time, lose their sense of self-consciousness, have a clear understanding of what needs to be done and how to make it happen, and feel a sense of control over the situation. Helping users achieve this flow state through a design is the holy grail of every designer, not just game designers.

Flow theory describes every task along two axes: 1) the challenge presented to the user, and 2) the user’s current skill level for meeting that challenge. If the challenge is greater than the user’s skill, the experience becomes overwhelming, and frustration and anxiety bubble up to the surface (see image below, top graph). On the other hand, if the user’s skill is greater than the challenge presented, the experience becomes underwhelming and too easy, resulting in boredom and dissatisfaction (see image below, bottom graph). Neither scenario generates an engaging, meaningful experience for the user; on the contrary, together they form the twin antitheses of the flow state itself.

What every designer needs to aim for in order to achieve the flow state is this “holy grail” balance, where the user’s skill level matches the challenge presented. I argue that all of this can also be understood in terms of how much cognition each of the two antithetical scenarios requires. If the challenge is too high, a higher than usual amount of cognition is needed to grasp and resolve it. If the skill is too high, too little cognition is required, and the brain does not feel engaged or interested in the task, since it has capacity to spare for other things. However, when the right balance is achieved, when just the right amount of cognition is required so that the brain is fully engaged without being overwhelmed, the flow state is generated and experienced as a successful cognitive flow (see image below).
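The challenge-versus-skill comparison above can be sketched as a small classifier. This is an illustration rather than a formal model from flow theory: the function name `flow_zone`, the shared arbitrary scale for challenge and skill, and the `tolerance` band for what still counts as balanced are all assumptions.

```python
def flow_zone(challenge: float, skill: float, tolerance: float = 0.1) -> str:
    """Classify an experience by comparing challenge with skill.

    Both values are on the same arbitrary scale; `tolerance` is the relative
    mismatch still treated as balanced (an illustrative assumption).
    """
    if challenge <= 0 or skill <= 0:
        raise ValueError("challenge and skill must be positive")
    ratio = challenge / skill
    if ratio > 1 + tolerance:
        return "anxiety"   # challenge outstrips skill: more cognition demanded than available
    if ratio < 1 - tolerance:
        return "boredom"   # skill outstrips challenge: cognition left idle
    return "flow"          # demand roughly matches capacity


for challenge, skill in [(9, 4), (3, 8), (5, 5)]:
    print(challenge, skill, flow_zone(challenge, skill))
```

Using a ratio rather than a difference means the band scales with the task: a small absolute mismatch matters more in an easy task than in a hard one.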

Part 02: Relational Joints Model

Relational Pathway & Joints

In my Relational Joints Framework, the concepts of affordances and signifiers play an essential role in defining and generating the model's basic units. In general terms, the model breaks down the overall design experience into interfaces that help us create a basic skeleton, or road map, of the design and allow us to understand more efficiently how we inhabit and navigate design interactions.

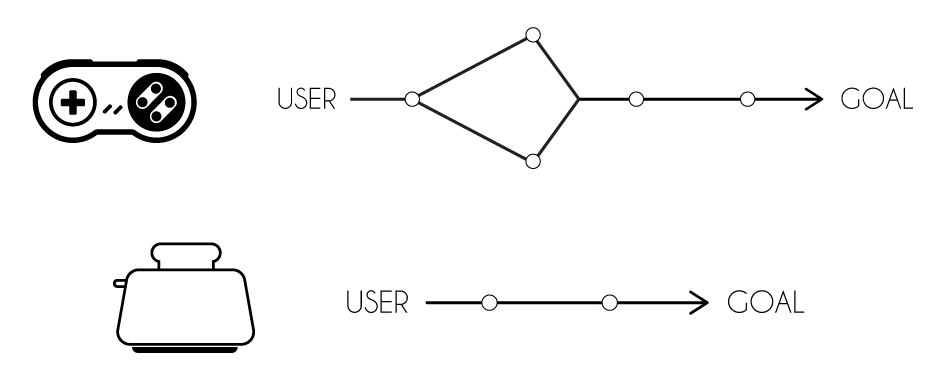

Every time we interact with a design, be it a toaster, a phone, or a game, we have an ultimate goal that motivates the engagement, like eating toast, making a phone call, or getting a few hours of immersive entertainment. Let’s begin building the Relational Joints Framework by representing this user-goal interaction as a diagram, with an arrow going from the “user” to the “goal” (see image below).

In the Relational Joints Framework, we think of the user’s engagement with the design as a series of “points of interaction” between the design’s parts, since distinct entities have to interact successfully with each other to achieve a specific goal. For example, in order to make toast, we HAVE to correctly manipulate the toaster. Therefore, there is an interface between the user and the toaster.

Identifying interface points is essential to begin building the design’s skeleton, as they show where the user needs to apply cognitive resources to successfully interact with and manipulate the design’s affordances. These interfaces are therefore the basic units of the Relational Joints Framework, and we will call them “relational joints”. We call the sum of all components necessary to achieve the specific goal of the design (including the user, all the relational joints, and the goal) a “relational pathway” (see image below).

Constructing a Relational Pathway

Let’s build our first relational pathway using our toast example. First, we need to define the number of relational joints for this design experience, which we do by identifying all the elements involved in the design, the resulting affordances that need to be understood, and the signifiers that point to the specific actions between the elements at each relational joint. The first relational joint occurs between the user and the toaster, and the second between the toaster and the bread, for a total of two relational joints in the relational pathway (see image below). Each relational joint represents a point where the user needs to identify and understand the affordances and, through the signifiers, interpret which ones are appropriate to act upon.

Once we have identified the number of relational joints in a pathway, it is important to determine how complex those interfaces are through their signifiers, meaning how many interactions need to be interpreted, understood, and successfully operated in order to navigate each relational joint. For example, the first relational joint, between the user and the toaster, requires the user to manage at least the following information:

Correctly plugging the toaster into the power supply

Knowing the function of the buttons and levers and operating them appropriately

Positioning the toaster in the correct orientation

The second relational joint, between the toaster and the bread, requires the user to understand and manage the following information:

Bread is to be placed in the slot

Bread is to be lowered by the platform

Metal coils will heat up to toast the bread

The user must wait for an auditory signal

Bread will be ejected from the toaster when ready

Aside from identifying the specific interactions that go into each relational joint and giving us a structure to organize the overall design experience, this framework gives us a foundation to begin exploring how much cognitive effort, and which specific cognitive processes, are needed at specific moments of a design experience. In our toast example, the first relational joint centers on manipulating mechanical features and on spatial navigation, while the second introduces auditory interpretation.
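One way to capture a relational pathway as data is sketched below (Python 3.9+). The class names `RelationalJoint` and `RelationalPathway`, the process labels, and the use of the number of listed interactions as a crude complexity proxy are hypothetical choices for illustration; the framework itself does not prescribe a scoring rule.

```python
from dataclasses import dataclass, field


@dataclass
class RelationalJoint:
    """Interface between two directly related elements of a design."""
    left: str
    right: str
    interactions: list[str] = field(default_factory=list)  # what the user must manage
    processes: set[str] = field(default_factory=set)       # illustrative cognitive-process labels

    @property
    def complexity(self) -> int:
        # Crude proxy (an assumption): one point per interaction to be managed.
        return len(self.interactions)


@dataclass
class RelationalPathway:
    """User, ordered relational joints, and the goal they lead to."""
    user: str
    joints: list[RelationalJoint]
    goal: str


toast = RelationalPathway(
    user="user",
    joints=[
        RelationalJoint(
            "user", "toaster",
            [
                "plug the toaster into the power supply",
                "know and operate the buttons and levers",
                "position the toaster in the correct orientation",
            ],
            {"mechanical manipulation", "spatial navigation"},
        ),
        RelationalJoint(
            "toaster", "bread",
            [
                "place the bread in the slot",
                "lower the bread on the platform",
                "metal coils heat up to toast the bread",
                "wait for the auditory signal",
                "bread is ejected when ready",
            ],
            {"auditory interpretation"},
        ),
    ],
    goal="eat toast",
)

for joint in toast.joints:
    print(f"{joint.left}-{joint.right}: {joint.complexity} interactions, "
          f"processes: {sorted(joint.processes)}")
```

Keeping interactions and cognitive processes as separate fields mirrors the distinction the text draws between how much has to be managed at a joint and what kind of cognition it calls for.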

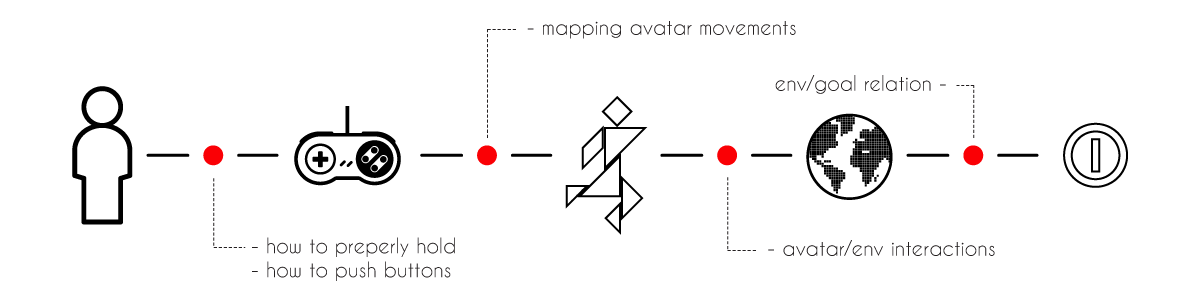

A Game’s Relational Pathway

Now that we understand how to generate the basic units of the model and assemble a relational pathway, let’s construct one for an avatar-mediated game. The first relational joint in a game is between the user and the controller; the user must understand how to properly hold the controller, push the buttons, and operate the joysticks. The second relational joint is between the controller and the avatar; the user must understand how the controller maps to the avatar’s actions (e.g., press X to jump, O to attack, etc.). The third relational joint is between the avatar and the game environment; the player must understand the avatar’s abilities and limitations to navigate the environment (e.g., can I swim, climb, open doors, etc.?). The last relational joint is between the environment and the goal; the player must understand how the environment relates to the goal itself (e.g., is the artifact inside the chest, the resource under a rock, the weapon on an NPC, etc.?). Once all of these joints are taken into consideration, we end up with a relational pathway for games that looks roughly like the one in the image below.
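Because each joint in this game pathway is the interface between two consecutive elements, the joints can be derived mechanically from the ordered chain of elements. A minimal sketch, with the hypothetical helper `joints_from_chain` and the guiding questions paraphrased from the paragraph above:

```python
def joints_from_chain(elements: list[str]) -> list[tuple[str, str]]:
    """Pair each element with the next one: every pair is one relational joint."""
    return list(zip(elements, elements[1:]))


# Ordered elements of the basic avatar-mediated game pathway.
GAME_CHAIN = ["user", "controller", "avatar", "environment", "goal"]

# What the player must understand at each joint (paraphrased from the text).
QUESTIONS = {
    ("user", "controller"): "How do I hold it, push the buttons, and use the joysticks?",
    ("controller", "avatar"): "Which input maps to which action (X to jump, O to attack)?",
    ("avatar", "environment"): "Can my avatar swim, climb, or open doors here?",
    ("environment", "goal"): "Is the goal in the chest, under a rock, or on an NPC?",
}

game_joints = joints_from_chain(GAME_CHAIN)
for number, joint in enumerate(game_joints, start=1):
    print(f"Joint {number}: {joint[0]}-{joint[1]} | {QUESTIONS[joint]}")
```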

Relational Pathway’s Basic Structure and Dynamics

I would like to start this section by explaining the basic structural principles and performance dynamics that this framework helps us visualize and model, which reflect how we inhabit and navigate a design. We will briefly discuss two concepts that I deem necessary to understand how we navigate a design: sequence of the structure and navigational dynamics.

An essential concept of the Relational Joints Framework is the sequence of the structure, which refers to the fact that each relational pathway has a specific sequence of relational joints that cannot be changed, an existing hierarchy that needs to be followed. For example, a player who is cognitively engaged in playing a game cannot inhabit and navigate the second relational joint (which requires understanding how the controller maps to and controls the avatar) without first understanding how to properly hold the controller. This holds for all of the relational joints within a relational pathway: they cannot be interchanged and must be followed in a very specific order (see image below).
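The sequence of the structure behaves like a chain of prerequisites. A minimal sketch, assuming joints are numbered from 0 in pathway order and that we track which joints the player already understands (the names `can_inhabit` and `furthest_reachable` are hypothetical):

```python
# Joints of the basic game pathway, in their fixed order (index 0 first).
GAME_JOINTS = ["user-controller", "controller-avatar", "avatar-environment", "environment-goal"]


def can_inhabit(target: int, understood: set[int]) -> bool:
    """A joint is reachable only if every earlier joint is already understood."""
    return all(i in understood for i in range(target))


def furthest_reachable(understood: set[int], n_joints: int = len(GAME_JOINTS)) -> int:
    """Index of the furthest joint the player can currently inhabit."""
    k = 0
    while k + 1 < n_joints and can_inhabit(k + 1, understood):
        k += 1
    return k


# Understanding later joints does not help if the first one is missing.
print(GAME_JOINTS[furthest_reachable({1, 2})])   # user-controller
print(GAME_JOINTS[furthest_reachable({0, 1})])   # avatar-environment
```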

Another essential concept of this framework is navigational dynamics, which refers to the way we navigate the relational pathway of a design, and which we can describe as a “loop and slide”. Let’s refer again to the relational pathway of an avatar-mediated game to explain this concept more clearly. Initially, the player needs to navigate through the first joint (user-controller), which requires the specific cognitive resources that allow for proper use of the controller (i.e., holding the controller and pushing its buttons). At this point, we can say that the player is looping through that first relational joint and managing only the cognition required at that joint. When the player moves on to manipulating the avatar with the controller, he has slid onto the second joint (controller-avatar), but that doesn’t mean he is no longer engaged with the first joint. As the concept of sequence of the structure established, the second relational joint is built upon the first, which means that the player is now engaging with two joints, and their respective cognitive loads, at the same time. In this way, the loop and slide dynamic continues as the player moves back and forth along the game’s relational pathway.
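The “loop and slide” idea implies that cognitive load stacks up as the player slides along the pathway. The sketch below assumes, for illustration only, that each joint carries a fixed load and that loads simply add; the numbers and the name `engaged_load` are invented, and real joints likely interact in less tidy ways.

```python
# Hypothetical per-joint cognitive loads (arbitrary units), in pathway order.
JOINT_LOADS = [
    ("user-controller", 2.0),
    ("controller-avatar", 3.0),
    ("avatar-environment", 5.0),
    ("environment-goal", 4.0),
]


def engaged_load(current: int, loads: list[float]) -> float:
    """Total load while inhabiting joint `current` (0-based).

    Sliding onto a joint does not release the earlier ones, so every joint
    up to and including `current` is still engaged.
    """
    if not 0 <= current < len(loads):
        raise IndexError("joint index out of range")
    return sum(loads[: current + 1])


loads = [load for _, load in JOINT_LOADS]
for i, (name, _) in enumerate(JOINT_LOADS):
    print(f"inhabiting {name}: engaged load {engaged_load(i, loads)}")
```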

Another important point is that navigating a relational pathway is not static. On the contrary, it is a very dynamic experience, as the player is constantly moving back and forth between and across joints. When the player picks up the controller, he is inhabiting the first joint. When he then starts moving the avatar, he has navigated onto the second joint. Once he is deep into exploring the game’s environment, he has moved on to inhabit the third joint. When he approaches a chest on top of a building, he is inhabiting the fourth joint. When he acquires the goal, climbs down the building, and starts exploring the environment again, he has moved back to the third joint, and so on and so forth, depending on what the player is doing in the game at any given moment (see image below). This dynamic shows that the cognitive requirement of a relational pathway is never static: it has a minimum and a maximum, but it is always in flux. This raises an interesting question: which joint is inhabited the most, on average, when playing games? Answering it would help establish a baseline for a game’s cognitive requirement and help us make better designs.
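One way to start answering this question is to log which joint a player inhabits over time during a playtest and compute each joint’s share of play time. A minimal sketch under that assumption: the log format, the name `inhabitation_share`, and the durations below are invented for illustration and are not measured data.

```python
from collections import Counter


def inhabitation_share(trace: list[tuple[str, float]]) -> dict[str, float]:
    """Fraction of total play time spent inhabiting each joint.

    `trace` is a hypothetical playtest log of (joint, seconds) segments,
    where `joint` is the furthest joint the player was inhabiting.
    """
    totals: Counter = Counter()
    for joint, seconds in trace:
        totals[joint] += seconds
    overall = sum(totals.values())
    if overall <= 0:
        raise ValueError("trace must contain some play time")
    return {joint: t / overall for joint, t in totals.items()}


# Invented durations that follow the narrative above (not measured data).
trace = [
    ("user-controller", 5),
    ("controller-avatar", 20),
    ("avatar-environment", 300),
    ("environment-goal", 40),     # approaching the chest on the building
    ("avatar-environment", 200),  # back to exploring
]
share = inhabitation_share(trace)
most_inhabited = max(share, key=share.get)
print(most_inhabited, round(share[most_inhabited], 2))
```

Averaging these shares over many sessions or players would be one way to approach the “on average” part of the question.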

The Real Game’s Relational Pathway Model

Up to this point, I have not mentioned the game camera, which is an essential element in an avatar-mediated game world. I waited until now to introduce it into the relational pathway because I wanted to keep the introduction of the model as simple as possible. However, the camera merits its own relational joint because of its independent but interrelated role with other elements in the game, which considerably articulates the pathway we have been working with. Cameras need to be designed: they can move independently from the avatar, interact with the avatar and the environment, have their own capabilities and functionalities, and follow their own set of rules, interactions, and roles in the experience. Where, then, does the camera fit in the pathway? One might first think it should be directly related to the avatar, as the avatar is usually its anchoring point and focus. That offers two possible placements: between the controller and the avatar, or between the avatar and the environment. However, neither placement fully captures the camera’s relation to these elements: the camera is guided by the controller but interacts with both the environment and the avatar. Taking all this into consideration, the most accurate placement of the camera joint is parallel to the control-avatar joint, which substantially modifies the basic game pathway we have used so far and furthers our understanding of the complexity of navigating such design spaces. As a result, the model bifurcates at the control element into the control-avatar joint and the control-camera joint (see image below).

At the same time, these two joints bifurcate as well, forming 1) the avatar-camera joint, 2) the avatar-environment joint, and 3) the camera-environment joint, which yields a relational pathway model of playing games like the one shown in the image below. Including the camera joint in this relational pathway allows us to visualize, structure, and understand the design experience of a game with more precision.
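One way to make the bifurcated model concrete is to store it as a small directed graph, with game elements as nodes and relational joints as edges. The representation below is an assumption for illustration only; the joint names come from the model described above.

```python
# Relational joints of the avatar-mediated pathway as (from_element, to_element) edges.
JOINTS = [
    ("user", "control"),
    ("control", "avatar"),
    ("control", "camera"),       # camera joint runs parallel to control-avatar
    ("avatar", "camera"),
    ("avatar", "environment"),
    ("camera", "environment"),
    ("environment", "goal"),
]


def branches_from(element):
    """Names of the joints that leave `element` toward later elements in the pathway."""
    return [f"{a}-{b}" for a, b in JOINTS if a == element]


print(len(JOINTS))               # 7
print(branches_from("control"))  # ['control-avatar', 'control-camera']
print(branches_from("avatar"))   # ['avatar-camera', 'avatar-environment']
```

The two-way branch out of `control` is the bifurcation introduced by the camera, and counting the edges gives the seven joints used in the rest of the article.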

Part 03: Model Application

In this last part of the article, I present the various ways that the Relational Joints Framework can be used as a design tool to create better experiences, and to develop and expand the analysis and understanding of how users inhabit and navigate a design.

Taxonomy of Categories

Language is a fundamental design tool, as it helps us capture, accurately define, and discuss design concepts. With this in mind, the Relational Joints Framework introduces terminology, such as “relational joints” and “relational pathway”, that allows us to talk more accurately not just about the design itself, but about how users inhabit and navigate it. With these concepts, we can further dissect the overall design experience and identify commonalities between its parts that can be grouped into categories. For example, because we can dissect a game into relational joints, it becomes easier to see that the entire relational pathway can be divided into two categories: one containing the first three relational joints (user-control, control-camera, control-avatar), which are centered around interfaces, and one containing the last four relational joints (avatar-camera, avatar-environment, camera-environment, environment-goal), which are centered around game mechanics (systems of interactions) (see image below). In general, in the first category the player needs to understand how to interact with the game’s systems and controllers. In the second category, the player needs to understand the mechanics of the world in order to navigate it successfully. This categorization is not absolute, as one can identify elements of both interface and mechanics in each category. Rather, it reflects the most common type of interaction observed in each group, and it comes in handy for building design heuristics. For example, a designer should design the game’s interfaces so that they do not tax the player’s cognition unnecessarily; otherwise, players are left with fewer cognitive resources to manage the game mechanics in an enjoyable fashion.
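The interface/mechanics split lends itself to a simple tally. In this sketch the per-joint loads are hypothetical numbers in arbitrary units; summing them by category shows at a glance which half of the pathway carries most of the demand.

```python
# The seven joints split into the two categories described above.
INTERFACE = ["user-control", "control-camera", "control-avatar"]
MECHANICS = ["avatar-camera", "avatar-environment", "camera-environment", "environment-goal"]


def category_loads(loads):
    """Sum per-joint loads for the interface and mechanics categories."""
    return {
        "interface": sum(loads[j] for j in INTERFACE),
        "mechanics": sum(loads[j] for j in MECHANICS),
    }


# Hypothetical loads: a conventional controller, but demanding mechanics.
loads = {
    "user-control": 1, "control-camera": 1, "control-avatar": 2,
    "avatar-camera": 2, "avatar-environment": 5, "camera-environment": 2, "environment-goal": 4,
}
print(category_loads(loads))  # {'interface': 4, 'mechanics': 13}
```

A low interface total relative to the mechanics total is what the heuristic above asks for: the controls stay out of the way so the player's cognition goes to the mechanics.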

Comparing relational pathways

Every design experience is different, but it can be challenging to pinpoint and articulate how and why designs differ from each other. For example, the experiences of making toast and playing a game are undoubtedly different, not just in the nature of the activity but also in their cognitive requirements. The Relational Joints Framework can help convey why these experiences differ by comparing the number of relational joints and their respective cognitive requirements across designs. The relational pathways of making toast and of playing a video game differ in their number of relational joints, which suggests that the experience with more joints is more complex and more cognitively taxing than the other. Comparing relational pathways is useful not only for design experiences that are fundamentally different, but also for facets within the same design. For example, by developing and analyzing a relational pathway, we can examine the differences and nuances between playing in the game world and navigating its menus or save screens. Breaking a design experience down into basic units like relational joints therefore gives us initial tools to quantify, compare, and discuss the overall complexity of designed experiences more objectively.

Cognitive Measurement

In this section, we address the quantification method currently used in the Relational Joints Framework to understand how cognitive resources are distributed across relational joints, with examples showing how the framework serves as a tool to analyze that distribution throughout the entire design experience.

Articulation

Let’s quickly address how we handle differences in the number of relational joints between relational pathways and why this matters for the model’s current interpretation. The more relational joints a relational pathway has, the “more articulated” I call it, because of (1) the number of relational joints the designer must take into consideration to achieve a good design, and (2) the number of relational joints the user needs to navigate to successfully operate that design. Similarly, the fewer relational joints a pathway has compared to another, the “less articulated” I call it (see image below). We can therefore hypothesize that a more articulated relational pathway will require a greater cognitive load than a less articulated one. However, I refer to the potential difference in cognitive load between a “more articulated” and a “less articulated” pathway as “articulation” rather than “cognitive taxation” because I have not yet scientifically quantified the actual difference in brain activity between designs with more or less articulated pathways. Such experiments will be necessary in the future: while the term “articulation” allows us to estimate the cognitive requirement of a relational pathway theoretically from its number of relational joints, directly measuring the differences in cognitive load between more and less articulated pathways is essential to determine exactly how difficult or complex a design experience is.

Humans, for example, can handle about 110 bits of information per second on average. Any experience that demands more than this limit will therefore potentially be too demanding and result in an uncomfortable experience. Determining the specific cognitive load of each relational joint within a relational pathway will help us define how to structure and categorize all the required interactions in a specific design experience so that the user can navigate it more efficiently.
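As a back-of-the-envelope check, one could compare per-joint estimates against the 110 bits-per-second figure. The estimates below are placeholders (the text notes these quantities have not yet been measured), and summing them assumes, in line with loop and slide, that all joints are engaged together at the deepest point of the pathway.

```python
# Average human processing limit quoted in the text, in bits per second.
LIMIT_BITS_PER_SECOND = 110.0


def articulation(pathway):
    """Articulation as used in the article: the number of relational joints."""
    return len(pathway)


def headroom(bits_per_joint, limit=LIMIT_BITS_PER_SECOND):
    """Spare capacity when every joint is engaged at once; negative means overload."""
    return limit - sum(bits_per_joint.values())


# Placeholder estimates for a four-joint pathway (hypothetical, not measured).
estimate = {
    "user-controller": 10.0,
    "controller-avatar": 25.0,
    "avatar-environment": 40.0,
    "environment-goal": 20.0,
}
print(articulation(estimate), headroom(estimate))  # 4 15.0

# Making the avatar-environment joint more demanding pushes the total past the limit.
overloaded = {**estimate, "avatar-environment": 60.0}
print(headroom(overloaded))  # -5.0
```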

Analyzing Cognitive Distribution

Because humans have a limited cognitive capacity, having a model that allows the designer to depict the specific instances of a design that require cognition is extremely useful, as now they can easily assign value and determine the type of cognition needed in those specific points. This is exactly what the Relational Joints Framework does! With the Relational Joints Framework, the designer is now able to thoroughly analyze a design and determine whether the experience as a whole is overwhelming or underwhelming at any given moment. Assuming that overwhelming or underwhelming experiences are directly related to too much or too little cognitive engagement, the Relational Joints Framework provides a sophisticated view of the design structure with which the designer can locate where the cognitive requirements of any relational joint are not being met (see image below).

Although our first approach may be to visualize the cognitive effort of a design experience as a static load, we have to remember that the way we inhabit a relational pathway is dynamic (“loop” and “slide”). The Relational Joints Framework also makes it possible to visualize user performance dynamics, with which the designer can approximate the time the user will spend on each relational joint, as well as the time spent looping and sliding across relational joints. These approximations tell the designer how close the user stays to the margins of a flow state while engaging with the design (i.e., whether the experience is overwhelming, optimal, or underwhelming).
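The overwhelming/optimal/underwhelming reading can be approximated by placing each segment of a session between two flow margins. The margins `low` and `high`, and the timeline itself, are hypothetical values chosen for illustration.

```python
def flow_band(load, low, high):
    """Place a momentary cognitive load relative to hypothetical flow-state margins."""
    if load > high:
        return "overwhelming"
    if load < low:
        return "underwhelming"
    return "optimal"


def time_in_bands(timeline, low, high):
    """Seconds spent in each band, given (active load, seconds) segments of a session."""
    totals = {"underwhelming": 0.0, "optimal": 0.0, "overwhelming": 0.0}
    for load, seconds in timeline:
        totals[flow_band(load, low, high)] += seconds
    return totals


# Hypothetical timeline: (active load, seconds spent at that load).
timeline = [(2.0, 5), (5.0, 20), (9.0, 120), (14.0, 30), (9.0, 90)]
print(time_in_bands(timeline, low=4.0, high=12.0))
# {'underwhelming': 5.0, 'optimal': 230.0, 'overwhelming': 30.0}
```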

Another scenario, as mentioned previously, is the difference between a user’s first experience of the design and their experience once they have mastered it. This matters because the designer has to ensure that the experience does not overwhelm the user in the very first interaction, when the cognitive load is highest, but also does not bore them once they have become efficient at handling the required cognitive load. In this sense, the Relational Joints Framework provides the basis for identifying elements in the design that could be contributing to too much or too little cognitive engagement. This scenario represents a great challenge for designers, but when they overcome it, they create an experience that users can enjoy for long periods of time, even after they have become efficient with the design.

Designing Cognitive Distribution

Let’s now look at practical examples of how we could use the Relational Joints Framework to fine-tune the distribution of cognitive requirements within a design to achieve better experiences, products, and games. Once we have constructed our design’s relational pathway, we assume that each relational joint requires a minimum amount of cognition to be navigated, plus additional cognition depending on the particulars of the design. For example, let’s say the game studio wants to introduce a totally new controller design that users will have to learn to handle correctly (e.g., a VR headset, where players need to learn where all the buttons are and how exactly the new ergonomic language works) (see image below).

This means that our design is going to require more cognition in the first half of the relational pathway, the one relating to interfaces, specifically at the user-control and control-avatar joints (see image below). For design purposes, this suggests that the remaining relational joints in the pathway should require less cognitive effort to balance out the total cognitive requirement. This could mean creating a relatively simple game based on well-known conventions, genres, and mechanics, so that the player’s cognition can focus on the novelty being introduced at the forefront of the gaming experience.

What would happen, though, if the scenario were flipped? Suppose the studio had an awesome idea for a completely new genre or an innovative mechanic that will revolutionize the gaming industry. The second half of the relational pathway (containing the avatar-environment and environment-goal joints) now requires greater cognitive engagement from the player. Design-wise, we should then make the rest of the relational joints in the pathway require as little cognition as possible to balance out the total cognitive requirement. This means we might need to avoid introducing novel control mappings, controller designs, or any other design aspect that falls under the first half of the relational pathway (see image below).
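The balancing heuristic in both scenarios can be expressed as a small function: keep the load of the novel joints fixed and scale the other joints down until the pathway fits a total budget. Proportional scaling and the budget value are assumptions of this sketch; in practice a designer would lower specific joints through concrete design changes rather than by a uniform factor.

```python
def rebalance(loads, novel, budget):
    """Scale down the non-novel joints so the pathway's total load fits the budget.

    Joints in `novel` keep their load. Returns a new dict in the original joint order.
    Raises ValueError if the novel joints alone already exceed the budget.
    """
    fixed = sum(loads[j] for j in novel)
    room = budget - fixed
    if room < 0:
        raise ValueError("novel joints alone exceed the budget")
    flexible = {j: v for j, v in loads.items() if j not in novel}
    current = sum(flexible.values())
    # Only shrink, never inflate, the flexible joints.
    scale = min(1.0, room / current) if current else 1.0
    out = dict(loads)
    for j, v in flexible.items():
        out[j] = v * scale
    return out


# Hypothetical case: a new controller makes the interface joints costly.
loads = {"user-control": 6.0, "control-avatar": 6.0,
         "avatar-environment": 4.0, "environment-goal": 4.0}
balanced = rebalance(loads, novel={"user-control", "control-avatar"}, budget=16.0)
print(balanced)
# {'user-control': 6.0, 'control-avatar': 6.0, 'avatar-environment': 2.0, 'environment-goal': 2.0}
```

The flipped scenario is the same call with the mechanics joints passed as `novel`.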

Cognitive Engagement Curve Typologies

Once we are able to break down a design experience into its basic units and relate each unit directly to its cognitive requirements, we can start observing generalizations of performance and experience across relational pathways according to their cognitive distributions. In this sense, the Relational Joints Framework allows us to build cognitive engagement curves that map the cognitive requirements of a relational pathway and help the designer characterize and define design experiences.

Let’s look at some hypothetical relational pathways and how they could shed light on some well-known design experiences. For example, when a relational pathway has low cognitive requirements at the start (the interface portion, where the player is familiar with the controller’s scheme) but greater cognitive requirements at the end (the mechanical portion, where the player needs to discover and understand the game’s mechanics), we can say that the experience increases in complexity and required skill. If we represented this cognitive requirement as a curve on top of a diagram of the relational pathway, we would get a line that rises towards the end of the pathway, an ever-increasing curve from beginning to end. The following image represents a hypothetical relational pathway with four relational joints (w, x, y, and z). As discussed previously, each relational joint has a specific cognitive requirement, represented here as circles around each red dot (relational joint). Graphing the cognitive requirement of each relational joint as a curve above the diagram of this hypothetical pathway yields a curve that is steeper towards the end of the relational pathway.

Let’s now analyze the cognitive engagement curve of a relational pathway with high cognitive requirements in the initial relational joints but lower cognitive requirements at the end (the inverse). Here, the cognitive requirements of the initial relational joints create a high entry barrier: the user needs to take in a lot of information before starting to play the game, or is unfamiliar with how to handle the game’s controller. If we plotted the cognitive engagement curve for this relational pathway, we would get a graph that is higher at the beginning of the pathway and decreases towards the end (see image below). This scenario can be found, for example, in board games, where it is referred to as a “high entry barrier”: players first need to understand the game rules and the types, roles, and locations of pieces before even attempting a first rudimentary play of the game.

Let’s explore what other cognitive engagement curves could look like if we change the cognitive requirements of each relational joint in our example pathway. What would it mean, in terms of cognitive requirements, to have a cognitive engagement curve that is constantly high (a straight-high line)? This scenario may reflect a very overwhelming experience, one that requires the player to use a lot of cognitive resources throughout the entire gaming experience. What about a straight-low line? This scenario may reflect a very boring, underwhelming experience. Let’s now consider concave and convex curves. A concave (U-shaped) curve may reflect an experience that requires a high cognitive load at the beginning and end of the relational pathway, whereas a convex (arch-shaped) curve may reflect an experience that requires less cognitive load at the beginning and end. A non-symmetrical distribution of cognitive loads will result in a more complex curve (see image below).
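The curve typologies can be approximated by a rough classifier over per-joint loads listed in pathway order. The thresholds (`high`, `low`, `tol`) are hypothetical, and "concave" follows this article's usage (high at both ends, a U shape), which a mathematician would call convex.

```python
def classify_curve(loads, high=7.0, low=3.0, tol=0.5):
    """Rough typology label for per-joint loads listed in pathway order.

    Thresholds are hypothetical. "concave" follows the article's usage:
    high load at both ends of the pathway (a U shape).
    """
    first, last, middle = loads[0], loads[-1], loads[1:-1]
    steps = list(zip(loads, loads[1:]))
    if max(loads) - min(loads) <= tol:  # essentially flat
        if first >= high:
            return "straight-high"
        if first <= low:
            return "straight-low"
        return "flat"
    if all(b >= a for a, b in steps):
        return "increasing"
    if all(b <= a for a, b in steps):
        return "decreasing"
    if middle and max(middle) < min(first, last):
        return "concave"
    if middle and min(middle) > max(first, last):
        return "convex"
    return "complex"


# Hypothetical four-joint pathways (joints w, x, y, z).
examples = {
    "rising": [1, 2, 4, 8],
    "high entry barrier": [8, 5, 3, 2],
    "constant high": [8, 8, 8, 8],
    "constant low": [1, 1, 1, 1],
    "high at both ends": [8, 3, 2, 7],
    "low at both ends": [2, 6, 7, 3],
    "asymmetric": [2, 8, 1, 5],
}
for name, curve in examples.items():
    print(f"{name}: {classify_curve(curve)}")
```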

As we can see, cognitive engagement curves allow us to not just quantify the cognitive performance needed to engage with a design, but also to visualize when and where those cognitive requirements need to be adjusted, thus allowing for more in-depth discussions and analyses of designs. Cognitive engagement curves also serve as a vehicle to start forming more concrete and accurate terminology of design experiences, which we can use to refer to specific design scenarios and decisions.

Design Tool Harnessing: Cognitive Expansion

As discussed in the previous sections, the Relational Joints Framework gives us the tools to visualize and harness the nuances of cognitive dynamics as we navigate each step of the design experience. This helps us analyze and visualize the design’s cognitive flow in more detail, while also allowing us to fine-tune and manipulate it at a more sophisticated level. In the next example (see image below), I present a design experience that includes an instance of cognitive expansion (red spike on the curve).

As we discussed earlier, instances of cognitive expansion can have very dire consequences on the player’s experience, but the Relational Joints Framework allows the designer to capture the exact point in the design where such instances happen, thus opening up the possibility to balance out the cognitive expansion instance with other design components that require less cognitive effort. Therefore, this framework helps us to have a more sophisticated reach and understanding of how to guide the user’s cognition, which in this particular example would be harnessing the focus aspect of the cognitive expansion, while mitigating the rest of the consequences.

In this example, we are able to ask specific questions: “Where” did the cognitive expansion happen? How intense was it? How long did it last? The answers guide us to the exact issue within the design and provide a better understanding of the parameters that generated the cognitive expansion. Understanding what exactly triggers a cognitive expansion gives the designer control over the elements surrounding it (see image below). In this sense, the relational pathway resembles a navigational road map: it allows the designer to zoom into each relational joint and isolate specific conditions in the design experience in order to identify, explore, and question the required cognitive effort, informing decisions that are essential to achieving the design’s vision.
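The three questions (where, how intense, how long) map directly onto a simple spike detector over a sampled load curve. The baseline, threshold, and sample data are hypothetical; a real analysis would need measured cognitive-load data, which the framework does not yet have.

```python
def find_expansions(samples, baseline, threshold):
    """Locate cognitive-expansion spikes in a sampled load curve.

    samples: (joint, load) pairs, one per time step.
    A spike is a run of consecutive steps whose load exceeds baseline + threshold.
    Returns (joint where the spike starts, peak load above baseline, duration in steps).
    """
    spikes, start = [], None
    # A trailing sentinel at baseline closes any spike still open at the end.
    for i, (_, load) in enumerate(samples + [(None, baseline)]):
        above = load > baseline + threshold
        if above and start is None:
            start = i
        elif not above and start is not None:
            run = samples[start:i]
            peak = max(value for _, value in run) - baseline
            spikes.append((samples[start][0], peak, i - start))
            start = None
    return spikes


# Hypothetical samples: one load reading per time step, tagged with the inhabited joint.
samples = [
    ("control-avatar", 3),
    ("avatar-environment", 4),
    ("avatar-environment", 11),
    ("avatar-environment", 13),
    ("avatar-environment", 5),
    ("environment-goal", 4),
]
print(find_expansions(samples, baseline=4, threshold=3))  # [('avatar-environment', 9, 2)]
```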

A question that comes to the surface is why we would want to let a frustrating and doubtful event happen deliberately in a design experience. The answer is that, with the Relational Joints Framework, the designer is, at least theoretically, in control of when such a cognitive expansion happens. As explained previously, a cognitive expansion involves intense focus, the kind that makes you forget what you were doing and tune out everything else around you. Focus is a very desirable tool in design because it allows the designer to guide the sequence in which the user experiences the design. For example, we could make players focus on a specific area or element in a new game level because it contains an important clue for navigating and winning the level. In other design disciplines, designers could make users look at the features of an object in a specific order, thus guiding them on how to operate it. There are myriad ways and techniques to generate and guide user focus; disruption of expectations (e.g., a door that does not open) and contrast against context (e.g., a white spot on a black wall) are two of the most successful. The Relational Joints Framework allows designers to break down the design so that they can thoroughly analyze how intense and lengthy the disruption can be, and at what specific moment in the experience they should introduce contrasting elements to best guide the user’s focus. This level of control in the design process is instrumental in achieving a refined and sophisticated design without relying heavily on the designer’s intuition or on trial and error.

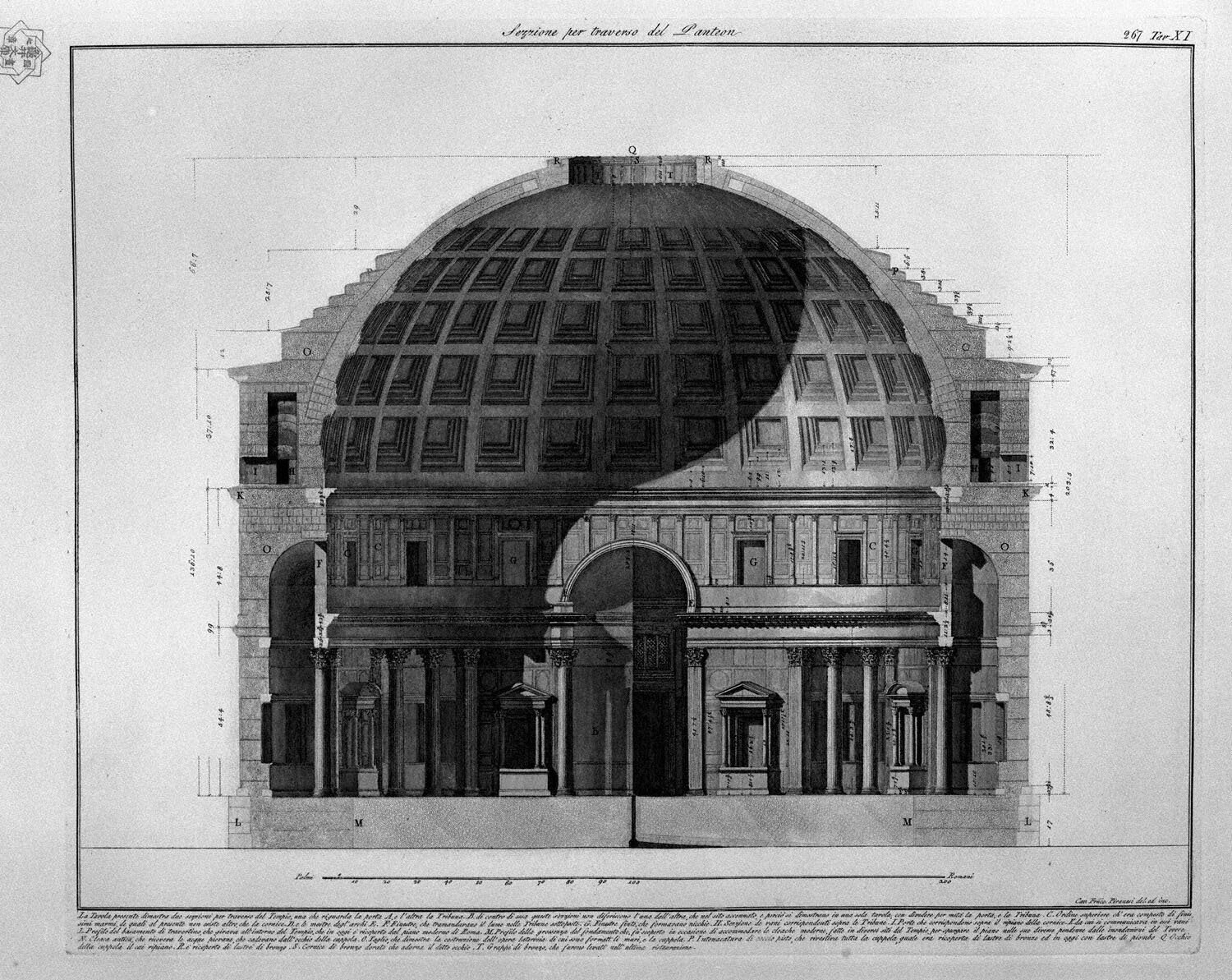

Joint Cross-sections

Up to this point, we have discussed the Relational Joints Framework in terms of its ability to break down a design into essential interfaces (relational joints) and how these allow us to understand the distribution of cognitive requirements across the design. However, each relational joint is made up of a myriad of elements that are not necessarily stated explicitly in the general diagram of a relational pathway. For example, the diagram of the user-controller relational joint does not make it obvious that one has to consider controller ergonomics. For this reason, this section introduces a tool that facilitates the analysis of the specific elements of a relational joint.

An essential skill I have developed as an architect is visualizing designs analytically. Architectural projects are complex designs, so architects break them down into isolated layers of information using various conceptual tools, one of them being the “cross section”. A cross section lets architects cut the design at a particular point along one of its axes and draw a view of the resulting cut plane (see images below). With cross sections, we can focus our attention on a very specific instance of the design to understand how its structure, design elements, and dynamics affect the user experience.

I have adopted the principle of cross sections, incorporated it into the Relational Joints Framework, and developed what I call “Joint Cross Sections”. The purpose of joint cross sections is to focus on a specific relational joint in order to 1) identify all the design instances that belong to that relational joint, 2) understand the impact of each of these elements within its relational joint, and 3) understand their interactions within the design’s ecosystem. Ultimately, joint cross sections help us work with the design at a more granular level without losing track of where each design instance belongs, freeing us from having to keep those interactions in our heads while analyzing and exploring the design. For example, how is a specific element within a relational joint related to all the design instances and elements around it? With joint cross sections, we can tell how far apart design instances are from each other, which helps us gauge and clarify how and why they affect each other.

As you become proficient at applying the Relational Joints Framework in your design process, you will notice, through the exercise of identifying which design instances belong to each relational joint, that certain design instances fall under the same relational joints every time they come up, no matter the design. For example, whenever we talk about ergonomics, all design instances relating to the controller, no matter the game, fall under the user-control joint. This is important because it narrows down the specific design instances we need to take into consideration to address a design issue.

If we look at the user-control relational joint, we find that design instances related to controller ergonomics are specific to this relational joint only. What is the purpose and design of the controller? Is it made for efficiency and comfort, so that all buttons can be reached easily? Or is it a collectible piece whose primary intent is to be a curiosity rather than an efficient, practical tool to navigate the game?

Similarly, if we focus our attention on the issue of visual scope, we will realize that it is unique to the control-camera joint. Is the camera giving the player a wide view of the world, letting them take in the overall game environment while also seeing enemies approaching from afar? Or is the scope more limited, narrower, and focused? How much control does the user have over the camera in each instance?

Regarding the control-avatar joint, we can identify the particular design instance of control mapping. How is the controller interacting with and manipulating the avatar? Is it by button press, by tilting and kinetic gestures, or by voice command? If it is by pushing buttons, does it follow any cultural conventions (e.g., pressing X triggers a jumping or dashing action)?

If we look at the avatar-camera joint, we can see that the position of the camera in relation to the avatar generates different design experiences. Do we want the camera far away from the avatar, presenting it as a small, independent entity following commands, or do we want to establish an emotional connection by placing the camera right behind the avatar, so the player sees the world from the avatar’s point of view?

In the avatar-environment joint, we can specifically identify design instances pertaining to possible interactions with the game world. Is the avatar able to open portals in concrete walls, slash objects, stick to and climb walls, swim, etc.?

On the other hand, when we focus on the camera-environment joint, we can identify design instances such as the limitations the environment places on the camera. Is the camera bounded by the environment? Is the camera inside the environment, offering a first- or third-person view, or is it above the environment, offering a “god view”? All of these affect the player’s perception of the game.

Finally, if we look at the environment-goal joint, we find design instances relating to questions such as: How much of the environment are you making the player consider in order to acquire the goal? How is the problem to solve being communicated through the environment?
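Joint cross sections can be sketched as a lookup from design instances to joints, plus a breadth-first search that measures how many joint-to-joint hops separate two instances. Treating joints that share an element as adjacent is an assumption made for this illustration, and the short instance labels are hypothetical names for the examples above.

```python
from collections import deque

# The seven joints of the avatar-mediated pathway and the elements each one relates.
JOINTS = {
    "user-control": ("user", "control"),
    "control-avatar": ("control", "avatar"),
    "control-camera": ("control", "camera"),
    "avatar-camera": ("avatar", "camera"),
    "avatar-environment": ("avatar", "environment"),
    "camera-environment": ("camera", "environment"),
    "environment-goal": ("environment", "goal"),
}

# Design instances assigned to joints, following the cross sections above (labels hypothetical).
INSTANCE_JOINT = {
    "controller ergonomics": "user-control",
    "visual scope": "control-camera",
    "control mapping": "control-avatar",
    "camera distance": "avatar-camera",
    "world interactions": "avatar-environment",
    "camera bounds": "camera-environment",
    "goal communication": "environment-goal",
}


def neighbours(joint):
    """Joints that share an element with `joint` (the assumed notion of adjacency)."""
    ends = set(JOINTS[joint])
    return [j for j, pair in JOINTS.items() if j != joint and ends & set(pair)]


def distance(instance_a, instance_b):
    """Number of joint-to-joint hops separating two design instances (breadth-first search)."""
    start, goal = INSTANCE_JOINT[instance_a], INSTANCE_JOINT[instance_b]
    seen, queue = {start}, deque([(start, 0)])
    while queue:
        joint, hops = queue.popleft()
        if joint == goal:
            return hops
        for nxt in neighbours(joint):
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, hops + 1))
    raise ValueError("joints are not connected")


print(distance("controller ergonomics", "goal communication"))  # 3
```

Under this assumption, controller ergonomics and goal communication sit three hops apart, while ergonomics and control mapping are direct neighbours.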

Some design instances and issues are universal and can arise across the entire relational pathway or at several joints. Contextualizing them at specific points in the design, and understanding why they happen there, can help us make predictions and better understand their specific impact on the experience. Take glitches, for example. Glitches can happen at any moment in a game, but their effect on the experience differs depending on where they happen. Glitches are always annoying and affect the user’s experience because they require extra cognitive effort to understand and figure out what is wrong. However, glitches at the avatar-environment joint seem to be the worst of all, because they break the flow of the game and are the hardest to adapt to.

Conclusion

Designing games is an intrinsically complex process, and the tools to facilitate the analysis of the overall interactions among design elements are limited. I developed the Relational Joints Framework to provide designers with a method to break down a design into its essential interactions and facilitate the analysis of the design experience. The detailed skeleton of the design experience that this framework helps generate allows the designer to visualize the design more clearly in terms of its sequence and hierarchy, and provides the foundation for modeling how the user cognitively engages with a design at specific instances. In this sense, the Relational Joints Framework not only allows the designer to generate a ‘road map’ of the design, but also provides design tools that add the analytical capability to model how a user navigates and cognitively inhabits the overall design. For example, the framework allows the designer to locate and visualize interactions among design elements within a relational joint, and also across all relational joints within a relational pathway, creating the opportunity to zoom into specific design interactions that would otherwise be difficult to establish. This framework therefore gives designers the ability to harness design elements, generating a more fine-tuned and granular understanding of what may cause the user to deviate from the flow state, and equips them with the conceptual tools to identify and manipulate those elements. Moreover, the descriptive terminology of this framework will help designers describe designed experiences more accurately and in more detail.

In a broader scope, the Relational Joints Framework introduces an objective method to compare designs against each other by their number of joints and by their estimated cognitive requirements, both overall and at specific joints. This framework not only has modeling and explanatory capabilities that let us understand user-design engagement in a new light, but also generates a number of conceptual design tools that give designers greater precision to construct and mold experiences to achieve the desired outcome. The implications and applications of this framework are vast given the utility of its methods of analysis and design across design disciplines, including but not limited to game design, architecture, and product design.

If you are interested in using this model for your project or research, I welcome collaborations; if you would like to share your thoughts, ideas, and comments about this model, feel free to contact me directly here. Write me a message and I will receive it directly in my inbox. I would also like to note that the imagery used in this article is not produced or owned by me, except for the graphs, which I produced and own.